The Agentic AI Authorization Challenge


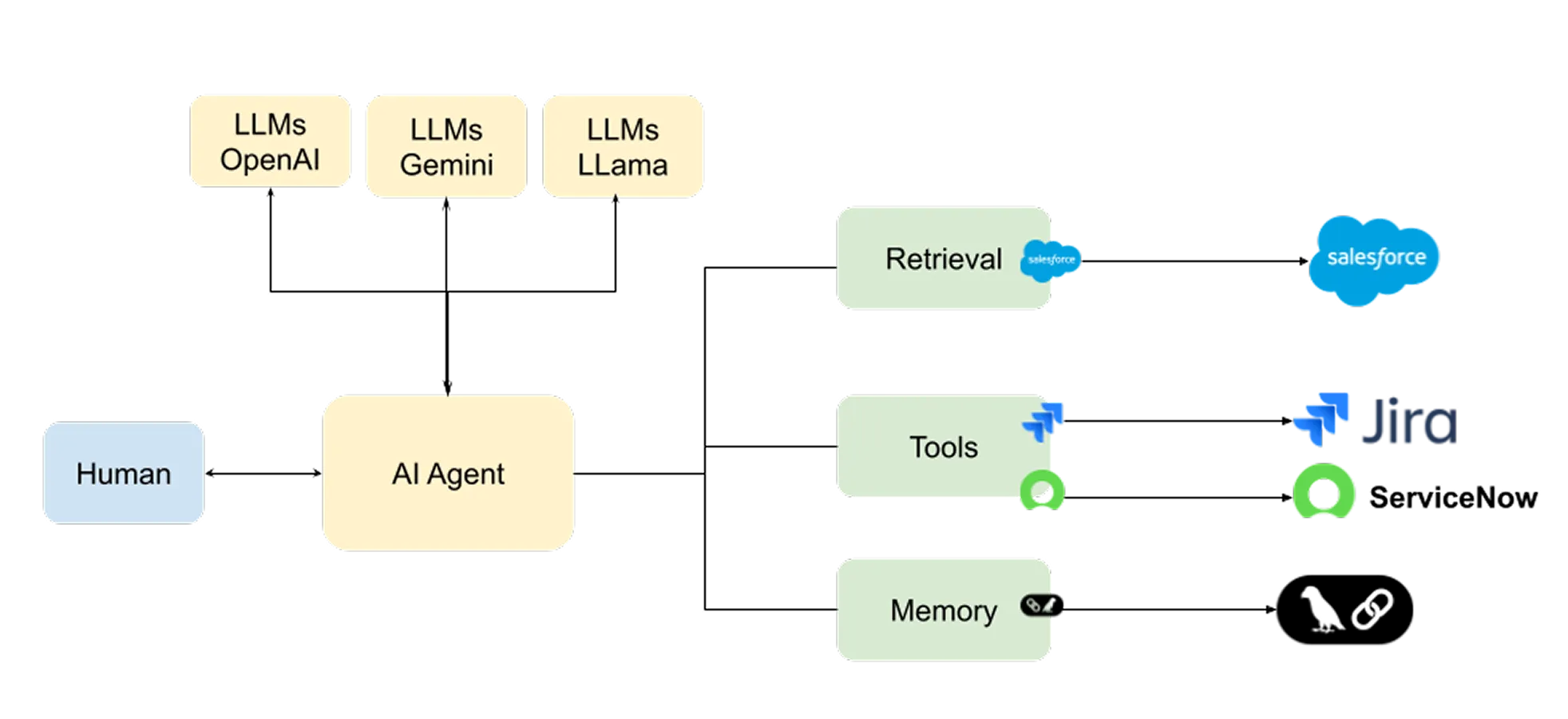

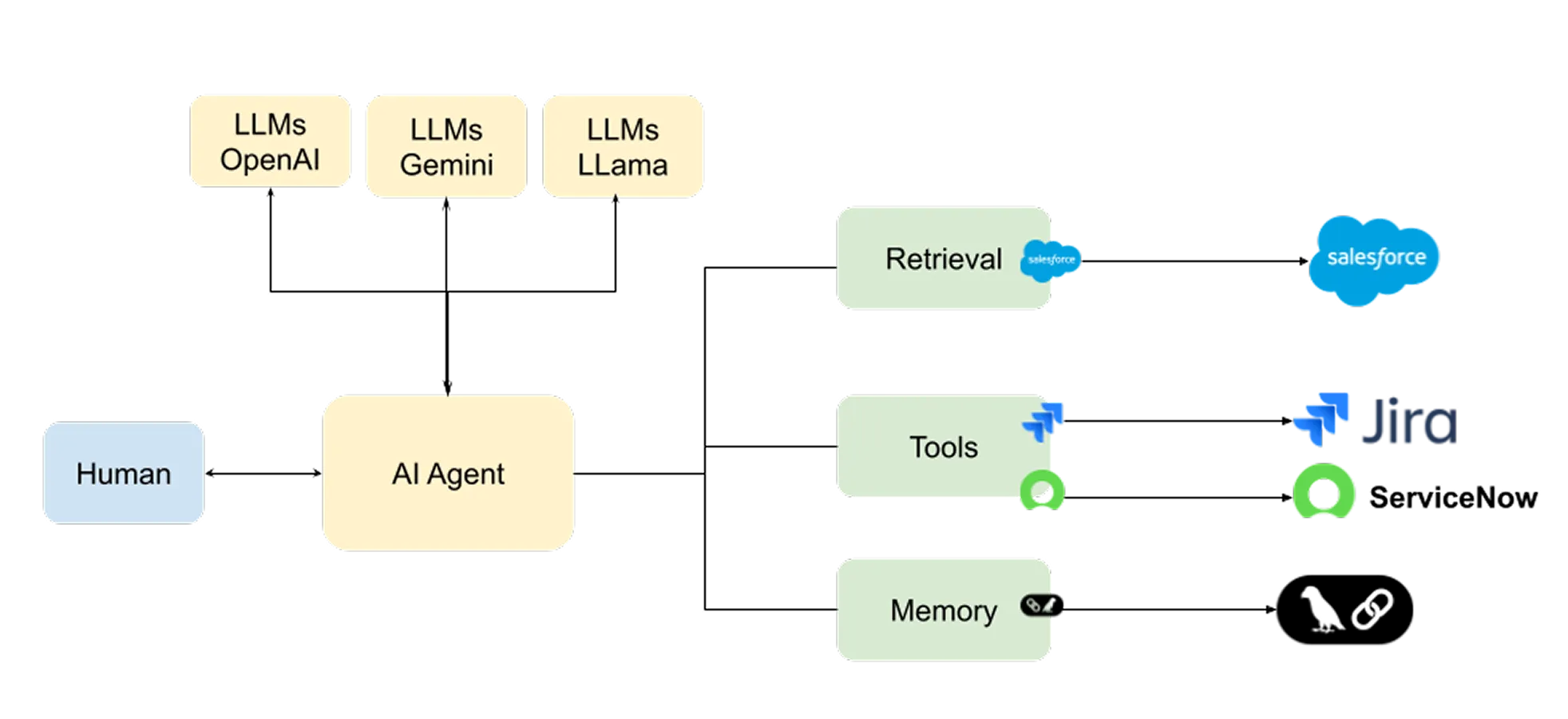

AI agents represent a paradigm shift in enterprise automation. Unlike static scripts, these agents operate autonomously, accessing and manipulating multiple applications to achieve high-level goals. They process information at machine speed, discovering and exercising the full extent of privileges available to them across hybrid environments.

While transformative, this autonomy introduces profound risks. AI agents lower the bar for compromise: they can use LLM reasoning to discover misconfigurations on their own, or accidentally trigger undesirable changes.

Combined with excessive privilege, this creates a new crisis for security administrators who are already struggling to enforce least privilege.

Recommended reading on agent architecture:

- Building Effective AI Agents | Anthropic

- Identity for AI Agents | KuppingerCole

This post examines the security of AI agents through two lenses, autonomous bots and human-interacting agents, and analyzes the identity and authorization challenges specific to each.

The Anatomy of Agentic Identity

To understand the risk, we must distinguish Agentic AI from existing identity models. We can categorize enterprise identities into three buckets:

- Humans: Identities tied to people. They access multiple applications, and their behavior is variable but generally bounded by human speed and intent.

- Non-Human Identities (NHIs): Service accounts, keys, or tokens for specific applications. Their behavior is predictable, fixed, narrow, and well-defined for a specific task.

- Agentic AI: A new, hybrid form of identity.

  - The Hybrid Identity: Like an NHI, it uses keys or tokens. Like a human, it accesses multiple applications and performs complex planning and reasoning.

  - The Force Multiplier: Unlike a human, it can attempt to exercise every permission it has within milliseconds to solve a problem, turning minor over-provisioning into a major vulnerability.

Deployment Models & Security Implications

There are two primary ways to deploy Agentic AI, each with a distinct authorization profile.

1. AI Bots (Fully Autonomous)

These agents resemble traditional application bots, but their workflows are driven by LLMs rather than hard-coded logic. Example: An AI agent conducting high-frequency algorithmic stock trading.

- The Security Implication: Admins must configure access (e.g., API keys) for every application the bot touches. Entitlements must be ruthlessly scoped. If the bot only needs to read market data, it must not have permission to write to the database, even if the LLM "thinks" it needs to.
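To make "ruthlessly scoped" concrete, here is a minimal deny-by-default check in Python. The scope strings (`market_data:read`, `db:write`) and the `BotCredential` and `authorize` names are hypothetical. The key design assumption is that this check runs in the target application or API gateway, outside the agent, so nothing the LLM reasons its way into can widen its access.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BotCredential:
    """Hypothetical credential an admin issues to one autonomous bot."""
    bot_id: str
    scopes: frozenset[str]


class ScopeDenied(PermissionError):
    """Raised when a bot requests an action outside its granted scopes."""


def authorize(cred: BotCredential, required_scope: str) -> None:
    """Deny by default: only scopes explicitly granted to the bot pass.

    This runs on the resource side, so whatever the LLM "thinks" it
    needs has no effect on the decision.
    """
    if required_scope not in cred.scopes:
        raise ScopeDenied(f"{cred.bot_id} lacks scope {required_scope!r}")


# The trading bot is granted read access to market data and nothing else.
trading_bot = BotCredential("trading-bot-01", frozenset({"market_data:read"}))

authorize(trading_bot, "market_data:read")  # allowed, returns None
try:
    authorize(trading_bot, "db:write")  # the LLM decided it wants to write
except ScopeDenied as err:
    print(err)  # trading-bot-01 lacks scope 'db:write'
```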

2. Human-Interacting AI Agents (Co-pilots)

When humans interact with agents, we face a "Delegation Dilemma." Consider, for example, a customer service agent that interacts with users, assesses severity, and executes refunds across separate billing and CRM systems. There are two models for handling such agents:

Model A: Sharing Human Identity (Delegated Authorization)

The human "shares" their authorization context with the AI agent. The agent acts on behalf of the user.

- Advantage: Caps the agent at the human's own privileges (the agent can only do what the human can). This preserves least privilege only to the extent that the human's own access is right-sized.

- The Risks:

  - Token Hijacking: Tokens may lack expiration, allowing misuse long after the session ends.

  - Token Theft: Tokens can be shared or intercepted.

  - The "Super-User" Effect: Most humans are already over-privileged, but a human usually stays within their own "lane" of knowledge. An AI agent, by contrast, will probe the boundaries of those privileges to solve a problem: if a user has unintended access to privileged data, the agent could find and use it.
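The first two risks are usually mitigated by giving the agent a short-lived token that is bound to one specific agent rather than a long-lived copy of the user's credentials. The sketch below models that idea with plain Python objects; `DelegatedToken`, `issue`, and `validate` are hypothetical names, not a real token format. In practice this role is played by signed tokens, for example via OAuth 2.0 Token Exchange (RFC 8693), and the actor binding is only meaningful if the presenting agent is itself authenticated (for example with mutual TLS or DPoP sender-constraining).

```python
import time
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DelegatedToken:
    """Hypothetical delegated token: a user lends part of their access to one agent."""
    subject: str              # the human the agent acts for
    actor: str                # the only agent allowed to present this token
    scopes: frozenset[str]    # what the agent may do on the human's behalf
    expires_at: float         # Unix timestamp; every token gets an expiry


def issue(subject: str, actor: str, scopes: set[str], ttl_seconds: float,
          now: Optional[float] = None) -> DelegatedToken:
    now = time.time() if now is None else now
    return DelegatedToken(subject, actor, frozenset(scopes), now + ttl_seconds)


def validate(token: DelegatedToken, presenter: str, scope: str,
             now: Optional[float] = None) -> bool:
    now = time.time() if now is None else now
    if now >= token.expires_at:
        return False  # expired: a hijacked token stops working after the TTL
    if presenter != token.actor:
        return False  # presented by something other than the bound agent
    return scope in token.scopes


# Usage with an explicit clock so the outcome is easy to follow.
tok = issue("alice", "refund-agent", {"refunds:create"}, ttl_seconds=900, now=1_000.0)
print(validate(tok, "refund-agent", "refunds:create", now=1_500.0))  # True
print(validate(tok, "refund-agent", "refunds:create", now=2_000.0))  # False (expired at 1900)
print(validate(tok, "other-agent", "refunds:create", now=1_500.0))   # False (wrong actor)
```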

Model B: Custom AI Agent Authorization (Shared Service Accounts)

The AI agent is deployed with its own set of keys (credentials) configured by an admin.

- The Challenge: Actions are attributed to the agent's shared credentials rather than to the individual user, so per-user auditability is lost. Every human using this agent effectively gains the agent's "super-powers."

- The Vendor Workaround: To fix this, vendors often suggest adding a secondary authorization layer within the AI application itself to filter what users can do.

- The Operational Reality: Enterprises already struggle to track "who has access to what." Building a parallel authorization infrastructure inside an AI tool is operationally impractical and creates a new surface area for misconfiguration.
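A minimal sketch of the vendor workaround makes the operational cost visible. Here the agent holds one service-account credential, and the AI application keeps its own per-user permission table to filter requests. All names and scopes are hypothetical. Note that `APP_LAYER_PERMISSIONS` is a second copy of "who has access to what" that must be kept in sync with the enterprise identity provider by hand.

```python
# Everything the shared service account can do in downstream systems.
SERVICE_ACCOUNT_SCOPES = frozenset({"billing:refund", "crm:read", "crm:write"})

# Hypothetical in-app permission table, maintained separately from the IdP.
APP_LAYER_PERMISSIONS: dict[str, frozenset[str]] = {
    "alice": frozenset({"crm:read", "billing:refund"}),
    "bob": frozenset({"crm:read"}),
}

audit_log: list[dict[str, str]] = []


def agent_call(end_user: str, scope: str) -> bool:
    """Allow only if both the service account and the app-layer table permit it."""
    user_scopes = APP_LAYER_PERMISSIONS.get(end_user, frozenset())  # unknown user: nothing
    allowed = scope in SERVICE_ACCOUNT_SCOPES and scope in user_scopes
    # Downstream systems only ever see the service account, so the end
    # user is recorded here or nowhere.
    audit_log.append({
        "principal": "svc-ai-agent",
        "on_behalf_of": end_user,
        "scope": scope,
        "decision": "allow" if allowed else "deny",
    })
    return allowed


print(agent_call("alice", "billing:refund"))  # True
print(agent_call("bob", "billing:refund"))    # False: the account could, Bob may not
```

If Bob's role changes in the identity provider but nobody updates `APP_LAYER_PERMISSIONS`, the agent keeps enforcing stale access, while downstream systems still only see `svc-ai-agent`.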

Note: Some vendors are addressing this challenge by automating bulk authorizations (e.g., Cloudflare’s vision for AI Agents). Standards are still evolving, but OAuth 2.1 (short-lived tokens, mandatory PKCE, refresh token rotation) and the MCP authorization flow are critical steps forward.

Summary: Securing the New Frontier

Unlike standard NHIs, Agentic AI behaves more like a hyper-fast human. Anomaly detection models must shift from "single-app predictability" to "cross-app behavioral analysis."
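One naive starting point for cross-app behavioral analysis is to track how many distinct applications each identity touches within a short window, and compare that against a per-identity baseline. The sketch below is illustrative only: the `Event` shape, window size, baseline values, and `tolerance` are assumptions, and a real detector would also weigh which apps and which actions were involved.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    identity: str
    app: str
    timestamp: float  # seconds


def apps_per_window(events: list[Event], window_s: float) -> dict[str, int]:
    """Max number of distinct apps each identity touched within any window_s span."""
    by_identity: dict[str, list[Event]] = defaultdict(list)
    for e in events:
        by_identity[e.identity].append(e)
    result: dict[str, int] = {}
    for identity, evs in by_identity.items():
        evs.sort(key=lambda e: e.timestamp)
        best, start = 0, 0
        for end in range(len(evs)):
            # Slide the window start forward until it spans at most window_s.
            while evs[end].timestamp - evs[start].timestamp > window_s:
                start += 1
            best = max(best, len({e.app for e in evs[start:end + 1]}))
        result[identity] = best
    return result


def flag_fan_out(events: list[Event], window_s: float,
                 baseline: dict[str, int], tolerance: int = 1) -> list[str]:
    """Identities whose cross-app fan-out exceeds their own baseline."""
    observed = apps_per_window(events, window_s)
    return sorted(i for i, n in observed.items() if n > baseline.get(i, 0) + tolerance)


events = [
    Event("refund-agent", "crm", 0.0),
    Event("refund-agent", "billing", 0.2),
    Event("refund-agent", "hr", 0.4),
    Event("refund-agent", "finance", 0.5),
    Event("alice", "crm", 0.0),
    Event("alice", "billing", 100.0),
]
print(flag_fan_out(events, window_s=1.0, baseline={"refund-agent": 2, "alice": 2}))
# ['refund-agent']
```

The baseline is keyed per identity rather than per application, which is the shift from single-app predictability to cross-app behavior.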

We recommend the following immediate actions for CISOs and AppSec leaders:

- For AI Bots (Autonomous): Right-size entitlements by analyzing behavioral patterns and stitching together context from multiple environments and data sources.

- For Human-Facing Agents: Avoid shared agent identities. Agents should operate strictly on behalf of the human (delegated access). Scope authorization tokens to the agent's intended use cases rather than mirroring the human's full access.

- The "Least Privilege" Imperative: Because AI agents can exploit over-privilege faster than any human intruder, they should have access only when they need it, only to what they need, and only for as long as they need it.
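The "what they need" part of these recommendations reduces to set arithmetic on entitlements. The sketch below is hypothetical (scope names and function names are ours) and assumes you already collect, from each environment or data source, which scopes an identity actually exercised during an observation window.

```python
def unused_entitlements(granted: set[str], observed_usage: list[set[str]]) -> set[str]:
    """AI bots: scopes granted but never exercised in any observed data source.

    These are candidates for removal when right-sizing the bot.
    """
    used: set[str] = set().union(*observed_usage)
    return granted - used


def delegated_scopes(human_access: set[str], agent_use_case: set[str]) -> set[str]:
    """Human-facing agents: never more than the human has, and only what the
    agent's use case needs, so over-provisioned human access is not inherited."""
    return human_access & agent_use_case


# Right-sizing a bot from usage stitched together across two sources.
bot_granted = {"market_data:read", "orders:create", "db:write"}
usage_by_source = [{"market_data:read"}, {"market_data:read", "orders:create"}]
print(unused_entitlements(bot_granted, usage_by_source))  # {'db:write'}

# Scoping a refund agent acting for Alice: hr:read and crm:write are dropped.
alice_access = {"crm:read", "crm:write", "billing:refund", "hr:read"}
refund_agent_needs = {"crm:read", "billing:refund"}
agent_scopes = delegated_scopes(alice_access, refund_agent_needs)
```

An unused scope is evidence, not proof, that it is unnecessary: a job that runs quarterly will look idle in a one-month window, so the observation period should cover the bot's full business cycle. The resulting scopes would then be issued in short-lived tokens to address the "how long" part.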

The Bottom Line: Agentic AI offers transformative value but carries disproportionate identity risks. Proactive entitlement management and token security are no longer optional; they are what keeps your AI from becoming the weakest link in your enterprise defenses.
